Another use case for the NetBeez API

I previously wrote a blog post about using the NetBeez API to retrieve ping results. In that example, I showed how to use the API to gather network latency and packet loss data between a source agent and a destination network or application. That kind of data helps establish whether the network is degrading the end-user experience of network services or applications.

In this article, I’ll give you another example of how to use the NetBeez API to gather network performance metrics. Here, I will guide you through the procedure to print the top 10 network agents that have triggered the largest number of alerts in the last 24 hours. What is a network agent? An agent can be a dedicated hardware or software appliance deployed on-prem at a remote branch, or a software endpoint that runs on a user’s desktop or laptop (find more information here). This example can be used to identify the network locations or remote users (e.g. WFH employees) that have experienced the highest number of network performance issues.

Working with the NetBeez API

If you’d like to test the following code yourself, please review the API getting-started section of the article I referenced above. There you can find all the steps required to set up the variables needed to make REST API requests to a BeezKeeper instance.

More documentation about the NetBeez API is available at https://api.netbeez.net. Documentation for the legacy API, which will eventually be fully replaced by the newer one, is at https://demo.netbeezcloud.net/swagger/.

List the top 10 agents with the most alerts

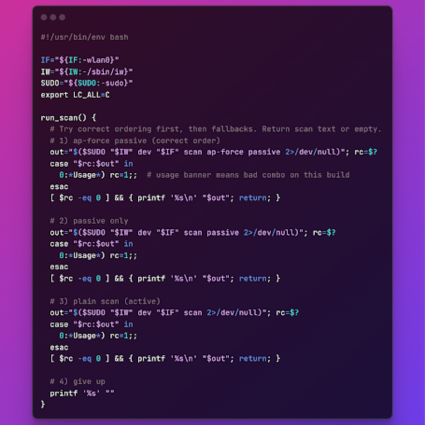

Let’s first see how we can retrieve all the alerts triggered by the NetBeez agents during the past 24 hours. We’ll then group alerts per agent and count them. After that, we’ll sort them and pick the top 10 agents with the largest number of alerts. Lastly, we enrich the data with the agent names by fetching them through some extra API calls. Let’s get started …

First, let’s set the from and to timestamps, expressed in milliseconds since the Unix epoch. In this run, the window spans 2021-07-05 to 2021-07-06 …

In [13]:

import time

import datetime

to_ts = int(time.time() * 1000)

from_ts = to_ts - (24 * 60 * 60 * 1000)

print(f"From: {datetime.datetime.fromtimestamp(from_ts/1000.0)}")

print(f"To: {datetime.datetime.fromtimestamp(to_ts/1000.0)}")

From: 2021-07-05 03:18:19.669000

To: 2021-07-06 03:18:19.669000
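
The cell above prints the window in the local time of the machine running the notebook. As a minimal sketch, assuming you would rather pin the window to UTC so it lines up with the created_at values the API returns, the same 24-hour window could be computed like this. The helper names last_24h_window_ms and ms_to_iso_utc are hypothetical and are not part of the NetBeez API or the original notebook.

```python
from datetime import datetime, timezone

MS_PER_DAY = 24 * 60 * 60 * 1000  # 24 hours in milliseconds


def last_24h_window_ms(now=None):
    """Return (from_ts, to_ts) in epoch milliseconds for the 24 hours ending at `now`.

    `now` should be a timezone-aware datetime; it defaults to the current UTC time.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    to_ms = int(now.timestamp() * 1000)
    return to_ms - MS_PER_DAY, to_ms


def ms_to_iso_utc(ts_ms):
    """Render an epoch-millisecond timestamp as an ISO 8601 string in UTC."""
    return datetime.fromtimestamp(ts_ms / 1000, tz=timezone.utc).isoformat()


from_ts, to_ts = last_24h_window_ms()
print(f"From: {ms_to_iso_utc(from_ts)}")
print(f"To:   {ms_to_iso_utc(to_ts)}")
```

Passing a fixed datetime instead of relying on the default makes the window reproducible, which can be handy when comparing runs.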

We then retrieve the alerts using the /nb_alerts.json endpoint of the legacy API, as documented in Swagger.

In [14]:

url = f"{base_url}/nb_alerts.json?from={from_ts}&to={to_ts}"

response = requests.request("GET", url, headers=legacy_api_headers, verify=False)

df = pd.json_normalize(response.json(), 'current_alerts')

print(df)

id message severity alert_ts state \

0 2129150 Agent back online 6 1625541338616 reported

1 2129149 Agent Unreachable 1 1625541248614 reported

2 2129148 Alert cleared 6 1625541110464 reported

3 2129147 Time out 1 1625541010260 reported

4 2129146 Alert cleared 6 1625540955837 reported

... ... ... ... ... ...

1093 2128056 Alert cleared 6 1625455447521 reported

1094 2128054 Time out 1 1625455328266 reported

1095 2128055 Traceroute max hops reached 1 1625455305708 reported

1096 2128053 Alert cleared 6 1625455258985 reported

1097 2128052 Alert cleared 6 1625455213040 reported

alert_detector_instance_id created_at \

0 30937 2021-07-06T03:15:38.000Z

1 30937 2021-07-06T03:14:08.000Z

2 34566 2021-07-06T03:11:54.000Z

3 34566 2021-07-06T03:10:17.000Z

4 34538 2021-07-06T03:09:17.000Z

... ... ...

1093 34538 2021-07-05T03:24:12.000Z

1094 34538 2021-07-05T03:22:15.000Z

1095 34568 2021-07-05T03:22:41.000Z

1096 34566 2021-07-05T03:21:02.000Z

1097 34538 2021-07-05T03:20:14.000Z

updated_at opening_nb_alert_id closed_ts ... \

0 2021-07-06T03:15:38.000Z 2129149.0 NaN ...

1 2021-07-06T03:14:08.000Z NaN NaN ...

2 2021-07-06T03:11:54.000Z 2129147.0 NaN ...

3 2021-07-06T03:11:54.000Z NaN 1.625541e+12 ...

4 2021-07-06T03:09:17.000Z 2129144.0 NaN ...

... ... ... ... ...

1093 2021-07-05T03:28:15.000Z 2128054.0 1.625456e+12 ...

1094 2021-07-05T03:24:12.000Z NaN 1.625455e+12 ...

1095 2021-07-05T03:26:27.000Z NaN 1.625456e+12 ...

1096 2021-07-05T03:26:59.000Z 2128045.0 1.625456e+12 ...

1097 2021-07-05T03:22:15.000Z 2128051.0 1.625455e+12 ...

target_display_name source_id source_type opening_alert_severity \

0 None 283 Agent 1.0

1 None 283 Agent NaN

2 baidu.com 1688253 NbTest 1.0

3 baidu.com 1688253 NbTest NaN

4 baidu.com 1688218 NbTest 1.0

... ... ... ... ...

1093 baidu.com 1688218 NbTest 1.0

1094 baidu.com 1688218 NbTest NaN

1095 baidu.com 1688255 NbTest NaN

1096 baidu.com 1688253 NbTest 1.0

1097 baidu.com 1688218 NbTest 1.0

source_agent_id nb_target_display_name source_test_target \

0 NaN NaN NaN

1 NaN NaN NaN

2 300.0 Baidu baidu.com

3 300.0 Baidu baidu.com

4 249.0 Baidu baidu.com

... ... ... ...

1093 249.0 Baidu baidu.com

1094 249.0 Baidu baidu.com

1095 300.0 Baidu baidu.com

1096 300.0 Baidu baidu.com

1097 249.0 Baidu baidu.com

source_test_type_id source_nb_target_id source_nb_test_template_id

0 NaN NaN NaN

1 NaN NaN NaN

2 1.0 463.0 2074.0

3 1.0 463.0 2074.0

4 1.0 463.0 2074.0

... ... ... ...

1093 1.0 463.0 2074.0

1094 1.0 463.0 2074.0

1095 4.0 463.0 2076.0

1096 1.0 463.0 2074.0

1097 1.0 463.0 2074.0

[1098 rows x 21 columns]
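
The request above assumes the call succeeds. As a hedged sketch of a slightly more defensive variant, the hypothetical helper below (fetch_alerts is my own name, not a NetBeez function) passes the timestamps as query parameters, sets a timeout, and raises on HTTP errors before reading the current_alerts list. It expects a requests.Session, or any object with a compatible get method, and it leaves TLS verification at the library default, whereas the original cell passes verify=False. The 30-second timeout is an arbitrary choice.

```python
def fetch_alerts(session, base_url, headers, from_ts, to_ts, timeout=30):
    """Return the list of alert dicts reported between from_ts and to_ts (epoch ms).

    `session` is expected to behave like requests.Session.
    """
    response = session.get(
        f"{base_url}/nb_alerts.json",
        params={"from": from_ts, "to": to_ts},  # encoded as ?from=...&to=...
        headers=headers,
        timeout=timeout,
    )
    # Surface 4xx/5xx responses as exceptions instead of failing later on .json()
    response.raise_for_status()
    # The alerts sit under the 'current_alerts' key, as in the cell above
    return response.json().get("current_alerts", [])


# Example usage (needs the third-party requests package and a reachable BeezKeeper):
#   import requests
#   alerts = fetch_alerts(requests.Session(), base_url, legacy_api_headers, from_ts, to_ts)
#   df = pd.json_normalize(alerts)
```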

Next, we keep only the alerts with severity less than 5: failure alerts have severity 1 and warning alerts have severity 4, while the event that clears an alert is marked with severity 6. We then count the alerts per agent and take the top 10. Note that the agents are referenced here by their ID (source_agent_id). Alerts raised on the agent itself (source_type Agent, such as Agent Unreachable) have no source_agent_id in the output above, so the grouping leaves them out.

In [15]:

opening_alerts = df[df['severity'] < 5]

count_per_agent = opening_alerts[['source_agent_id', 'severity']].groupby(['source_agent_id']).count()

count_per_agent = count_per_agent.rename(columns={'severity':'count'})

count_per_agent.index = pd.to_numeric(count_per_agent.index, downcast='integer')

top_10 = count_per_agent.nlargest(10, columns='count')

print(top_10)

count

source_agent_id

249 211

300 203

54 19

270 2

279 2

319 2

329 2

341 2

280 1

297 1
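
A small worked example may make the filtering and counting step easier to follow. The payload below is invented for illustration: only the current_alerts key and the field names come from the output shown earlier. It is a sketch of the same idea using groupby(...).size(), not the exact code from the notebook.

```python
import pandas as pd

# Hypothetical payload with the same shape as the API response used above
payload = {
    "current_alerts": [
        {"id": 1, "severity": 1, "source_agent_id": 249},   # failure
        {"id": 2, "severity": 6, "source_agent_id": 249},   # cleared, dropped
        {"id": 3, "severity": 4, "source_agent_id": 300},   # warning
        {"id": 4, "severity": 1, "source_agent_id": None},  # agent-level alert
        {"id": 5, "severity": 1, "source_agent_id": 249},   # failure
    ]
}

df = pd.json_normalize(payload, "current_alerts")

# Keep only the alerts that open an incident (severity 1 or 4)
opening_alerts = df[df["severity"] < 5]

# groupby skips rows whose key is missing, so the agent-level alert is not counted
counts = opening_alerts.groupby("source_agent_id").size()

# Take the ten largest counts and convert to plain Python ints
top = counts.nlargest(10)
result = {int(agent_id): int(n) for agent_id, n in top.items()}
print(result)
```

Agent 249 ends up with two alerts and agent 300 with one; the cleared alert and the alert without a source_agent_id do not contribute.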

To convert the agent IDs to agent names we then retrieve the agent objects one by one and extract the name strings from those objects.

In [16]:

agent_names = []

for agent_id in top_10.index:

    url = f"{base_url}/agents/{agent_id}.json"

    response = requests.request("GET", url, headers=legacy_api_headers, verify=False)

    agent_names.append(response.json()['name'])

print("Done loading names")
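
The loop above assumes every lookup returns an object with a name. As a minimal sketch, assuming you want the chart to survive an agent that has since been deleted or a response without a name field, the lookup can be separated from the HTTP call. Both lookup_agent_names and the get_agent callable are hypothetical names introduced here; get_agent stands for any function that takes an agent ID and returns the agent object as a dict, or None when it cannot be found.

```python
def lookup_agent_names(agent_ids, get_agent):
    """Map each agent ID to its name, falling back to a placeholder label.

    `get_agent` is a callable: agent ID (int) -> dict with a 'name' key, or None.
    """
    names = {}
    for raw_id in agent_ids:
        agent_id = int(raw_id)  # IDs may arrive as floats such as 249.0
        if agent_id in names:
            continue  # avoid fetching the same agent twice
        agent = get_agent(agent_id) or {}
        names[agent_id] = agent.get("name", f"agent-{agent_id}")
    return names


# Stand-in for the API, using names that appear in this article
known_agents = {249: {"name": "San Jose"}, 300: {"name": "San Jose - WiFi"}}
print(lookup_agent_names([249.0, 300, 54], known_agents.get))
```

In the notebook, get_agent could wrap the same GET request to /agents/{agent_id}.json that the loop above makes.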

Finally, we prepare the data for plotting.

In [17]:

to_plot = pd.DataFrame(index=agent_names, data=top_10.values, columns=['Alert Count'])

to_plot.index = to_plot.index.rename('Agents')  # rename() returns a new Index, so assign it back

print(to_plot)

Alert Count

Agents

San Jose 211

San Jose - WiFi 203

Cloud - Google 19

Container - AWS 2

Pittsburgh - Wired 2 2

Pittsburgh - SmartSFP 2

DESKTOP-S87JLNG 2

Pittsburgh - Virtual Agent 2

Pittsburgh - Wired 1

Pittsburgh - WiFi Main 1

In [18]:

to_plot.plot.bar()

Out[18]:

<AxesSubplot:>

Conclusion

I hope this article provided another good example of how to use the NetBeez API to identify the users or network locations that are experiencing more issues than others. The code in this article is available as a Jupyter Notebook on the NetBeez GitHub account; for a live instance of the notebook, check out the nb-api binder link.

Lastly, are there other examples of using the NetBeez API that you’d like me to share? Just drop a note in the comments section!